Deploying a ZooKeeper cluster in k8s

Because of business requirements, we plan to deploy a zk cluster in k8s using Deployments.

Note: this does not use a StatefulSet.

Below are the relevant zk-service.yaml and zookeeper.yaml:

apiVersion: v1
kind: Service
metadata:
  name: zoo1
  labels:
    app: zookeeper-1
spec:
  ports:
    - name: client
      port: 2181
      protocol: TCP
    - name: follower
      port: 2888
      protocol: TCP
    - name: leader
      port: 3888
      protocol: TCP
  selector:
    app: zookeeper-1
---
apiVersion: v1
kind: Service
metadata:
  name: zoo2
  labels:
    app: zookeeper-2
spec:
  ports:
    - name: client
      port: 2181
      protocol: TCP
    - name: follower
      port: 2888
      protocol: TCP
    - name: leader
      port: 3888
      protocol: TCP
  selector:
    app: zookeeper-2
---
apiVersion: v1
kind: Service
metadata:
  name: zoo3
  labels:
    app: zookeeper-3
spec:
  ports:
    - name: client
      port: 2181
      protocol: TCP
    - name: follower
      port: 2888
      protocol: TCP
    - name: leader
      port: 3888
      protocol: TCP
  selector:
    app: zookeeper-3
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper-deployment-1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-1
      name: zookeeper-1
  template:
    metadata:
      labels:
        app: zookeeper-1
        name: zookeeper-1
    spec:
      containers:
        - name: zoo1
          image: hbp/zookeeper:3.6.3
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 2181
          env:
            - name: ZOOKEEPER_ID
              value: "1"
            - name: ZOOKEEPER_SERVER_1
              value: zoo1
            - name: ZOOKEEPER_SERVER_2
              value: zoo2
            - name: ZOOKEEPER_SERVER_3
              value: zoo3
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper-deployment-2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-2
      name: zookeeper-2
  template:
    metadata:
      labels:
        app: zookeeper-2
        name: zookeeper-2
    spec:
      containers:
        - name: zoo2
          image: hbp/zookeeper:3.6.3
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 2181
          env:
            - name: ZOOKEEPER_ID
              value: "2"
            - name: ZOOKEEPER_SERVER_1
              value: zoo1
            - name: ZOOKEEPER_SERVER_2
              value: zoo2
            - name: ZOOKEEPER_SERVER_3
              value: zoo3
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper-deployment-3
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-3
      name: zookeeper-3
  template:
    metadata:
      labels:
        app: zookeeper-3
        name: zookeeper-3
    spec:
      containers:
        - name: zoo3
          image: hbp/zookeeper:3.6.3
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 2181
          env:
            - name: ZOOKEEPER_ID
              value: "3"
            - name: ZOOKEEPER_SERVER_1
              value: zoo1
            - name: ZOOKEEPER_SERVER_2
              value: zoo2
            - name: ZOOKEEPER_SERVER_3
              value: zoo3

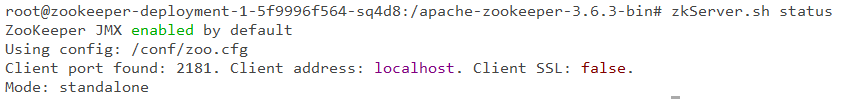

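In practice, these Deployments do deploy successfully, but each instance runs in standalone mode; they are not linked to one another through the server entries in env. Do the nodes need to be linked by IP address or hostname to form a cluster, rather than by the Service names zoo1/zoo2/zoo3?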

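Why deploy with a Deployment? The image you are using above is prepackaged, so its internal logic is unknown. You need to look inside the image at what zoo.cfg contains; that is what causes this. Also, here are two articles on installing zk: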

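The zookeeper:3.6.3 image is the official image. We previously installed with a StatefulSet, but our business side would not accept that approach; they said it depends on too many components and were worried about stability. The posts you recommended presumably deploy with a StatefulSet, and that approach does deploy successfully. The requirement now is to use Deployments. Could I put a startup script in the yaml as a command, as with zookeeper 3.4.10, and deploy with zkServer.sh start? Presumably some additional configuration would be needed for the nodes to recognize each other.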

Why were the settings not picked up when passed through env?

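env:
  - name: ZOOKEEPER_ID
    value: "3"
  - name: ZOOKEEPER_SERVER_1
    value: zoo1
  - name: ZOOKEEPER_SERVER_2
    value: zoo2
  - name: ZOOKEEPER_SERVER_3
    value: zoo3

Too many dependent components? I don't understand what that refers to. The two examples I gave you are the officially provided ones; they are the standard.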

StatefulSet exists for exactly this purpose; otherwise, how would it differ from a Deployment...

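The key is zoo.cfg. For the nodes to recognize each other, it must contain the following relationship:

$ cat zoo.cfg
...
server.1=zookeeper-0.zk-hs.test.svc.cluster.local:2888:3888
server.2=zookeeper-1.zk-hs.test.svc.cluster.local:2888:3888
server.3=zookeeper-2.zk-hs.test.svc.cluster.local:2888:3888

That is as far as I can help; you will have to troubleshoot the rest yourself.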
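For the Deployment-plus-Service layout in the question, the same server.N relationship could in principle be expressed with the Service names. The fragment below is a minimal sketch, assuming the image follows the conventions of the official Docker Hub zookeeper image, whose entrypoint generates zoo.cfg from ZOO_MY_ID and ZOO_SERVERS (not from the ZOOKEEPER_ID / ZOOKEEPER_SERVER_N variables used in the question). Whether hbp/zookeeper:3.6.3 behaves this way is an assumption that should be checked against the entrypoint script inside the image.

```yaml
# Sketch only: env section for the container in zookeeper-deployment-1.
# Assumes the official image's entrypoint, which writes ZOO_MY_ID to the
# myid file and turns each space-separated ZOO_SERVERS entry into a
# server.N line in zoo.cfg (only when no zoo.cfg exists yet).
env:
  - name: ZOO_MY_ID
    value: "1"            # use "2" and "3" in the other two Deployments
  - name: ZOO_SERVERS
    # The folded scalar (>-) joins these lines with single spaces.
    value: >-
      server.1=zoo1:2888:3888;2181
      server.2=zoo2:2888:3888;2181
      server.3=zoo3:2888:3888;2181
```

If the generated zoo.cfg inside the pod still lacks server.N lines after this change, the image is probably not reading these variables, and the configuration has to be supplied another way.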

The main concern is that it requires pv/pvc, which depend on NFS, and NFS is currently somewhat unstable.

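You are right: the node instances in the cluster need to be able to recognize each other, and that is closely tied to the server.1/2/3 entries in zoo.cfg. It seems, though, that these cannot be placed in the startup script (the yaml).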
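One possible way to get the server.N entries into the yaml is to ship zoo.cfg as a ConfigMap and write the myid file from the container command. The following is a sketch under stated assumptions, not a verified setup: the ConfigMap name zk-config and the mount path /zk-conf are hypothetical, and it assumes that the image has zkServer.sh on its PATH and uses /data as its data directory, as the official image does. quorumListenOnAllIPs is a standard zoo.cfg option; it is included here on the assumption that a pod cannot bind to the address of its own Service.

```yaml
# Sketch only: zk-config and /zk-conf are hypothetical names.
apiVersion: v1
kind: ConfigMap
metadata:
  name: zk-config
data:
  zoo.cfg: |
    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/data
    clientPort=2181
    # Accept quorum and election connections on all interfaces.
    quorumListenOnAllIPs=true
    server.1=zoo1:2888:3888
    server.2=zoo2:2888:3888
    server.3=zoo3:2888:3888
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper-deployment-1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-1
  template:
    metadata:
      labels:
        app: zookeeper-1      # matched by the zoo1 Service selector
    spec:
      containers:
        - name: zoo1
          image: hbp/zookeeper:3.6.3
          imagePullPolicy: IfNotPresent
          # Overrides the image entrypoint: write this node's id, then
          # start ZooKeeper in the foreground with the mounted config.
          command: ["/bin/sh", "-c"]
          args:
            - |
              mkdir -p /data
              echo 1 > /data/myid
              exec zkServer.sh start-foreground /zk-conf/zoo.cfg
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - name: zk-config
              mountPath: /zk-conf
      volumes:
        - name: zk-config
          configMap:
            name: zk-config
```

The other two Deployments would differ only in their names, labels, and the id written to /data/myid. Since nothing here is backed by a pv/pvc, a restarted pod begins with an empty data directory and has to resync from the remaining quorum members.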
